Deep learning has become the backbone of modern artificial intelligence, powering applications like image recognition, natural language processing, and autonomous vehicles. Among the many innovations in this field, the ResNet architecture stands out as a groundbreaking milestone. With its distinctive structure and problem-solving capabilities, ResNet has changed how neural networks are designed and trained. This article dives into the details of the ResNet architecture, exploring how it works, why it matters, and why it continues to influence AI research today.

What Is ResNet Architecture?

ResNet, short for “Residual Network,” is a type of deep neural network introduced by researchers at Microsoft Research in 2015. The key idea behind ResNet architecture is the concept of “residual learning,” which helps tackle one of the biggest challenges in deep learning: the vanishing gradient problem. This challenge arises when training very deep networks, where the gradients (used to update weights during training) can become so small that they stop contributing effectively to learning.

ResNet addresses this issue by adding shortcut connections, called “skip connections,” between layers. These shortcuts allow information to bypass one or more layers and flow directly to subsequent layers. This simple yet powerful idea has paved the way for building very deep neural networks, with some ResNet models reaching hundreds or even more than a thousand layers!

Key Features of ResNet Architecture

Several key features characterize the ResNet architecture:

- Residual Blocks: These are ResNet’s building blocks. A residual block adds its input directly to the output of its stacked layers, giving information a path that bypasses those computations.

- Skip Connections: These connections prevent information from getting lost as it flows through the network.

- Ease of Training: Thanks to skip connections, ResNet networks are easier to train, even with many layers.

- Scalability: ResNet can be scaled to hundreds of layers (and, in experiments, to more than a thousand) without the degradation that plain networks suffer.

Let’s unpack these features in detail to understand what makes ResNet architecture such a game-changer.

The Challenge of Training Deep Networks

Before ResNet, researchers faced significant challenges when training very deep neural networks. While adding more layers theoretically allows a network to learn more complex features, in practice it often led to the following:

- Vanishing Gradients: As gradients are backpropagated through the layers, they can shrink exponentially, making it difficult for the earlier layers to learn.

- Degradation Problem: Surprisingly, adding more layers often resulted in worse rather than better performance. This was not simply overfitting: deeper plain networks were harder to optimize, so even their training error increased.
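To make the vanishing-gradient point concrete, here is a toy sketch, assuming a chain of one-unit sigmoid layers h ← sigmoid(w·h) with a shared weight w (a deliberate simplification; real networks have many units per layer and often use other activations). By the chain rule, the gradient of the output with respect to the input is a product of per-layer factors w·σ′(z), and because σ′(z) never exceeds 0.25, with w = 1 the product shrinks at least as fast as 0.25^depth. The function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z = 0


def plain_chain_gradient(depth: int, weight: float = 1.0, x: float = 0.0) -> float:
    """d(output)/d(input) for `depth` toy layers h <- sigmoid(weight * h)."""
    h, grad = x, 1.0
    for _ in range(depth):
        z = weight * h
        grad *= weight * sigmoid_grad(z)  # chain rule: multiply local derivatives
        h = sigmoid(z)
    return grad


for n_layers in (1, 5, 10, 20):
    print(n_layers, plain_chain_gradient(n_layers))
```

Each extra layer multiplies the gradient by another factor below 0.25, so the earliest layers receive almost no learning signal.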

ResNet architecture elegantly solved these problems. By introducing residual blocks and skip connections, ResNet ensured that information and gradients could flow freely through the network, even in very deep architectures.

How Residual Learning Works

Residual learning is the foundation of ResNet architecture. Instead of learning a direct mapping from input to output, a residual block learns the residual—or the difference—between the input and the output. This can be expressed mathematically as:

F(x) = H(x) - x

Here:

- H(x) is the desired output.

- x is the input.

- F(x) is the residual, the part of the output the network needs to learn.

By rearranging the equation, you get:

H(x) = F(x) + x

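This equation is implemented using a shortcut connection that adds the input x directly to the output of the residual block’s layers. This simple operation lets the network focus on learning the residual function, which is often easier than learning the complete mapping: if the desired mapping is close to the identity, the layers only need to push F(x) toward zero instead of reproducing x from scratch.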
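A minimal sketch of H(x) = F(x) + x, assuming a toy residual branch F(x) = Wx built by a hypothetical `linear` helper (no convolutions and no training involved). With all-zero weights the block is exactly the identity, and a target such as H(x) = 1.1x only needs the small residual W = 0.1·I.

```python
from typing import Callable, List

Vector = List[float]


def linear(weights: List[List[float]]) -> Callable[[Vector], Vector]:
    """Return a toy residual branch F(x) = W x for a square matrix W."""
    def f(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return f


def residual(f: Callable[[Vector], Vector]) -> Callable[[Vector], Vector]:
    """Wrap a branch F so that the block computes H(x) = F(x) + x."""
    def h(x: Vector) -> Vector:
        return [fx + xi for fx, xi in zip(f(x), x)]
    return h


x = [1.0, -2.0, 3.0]

# F(x) = 0: the block passes its input through unchanged.
zero_branch = residual(linear([[0.0] * 3 for _ in range(3)]))
print(zero_branch(x))  # [1.0, -2.0, 3.0]

# To realize H(x) = 1.1 x, the branch only has to learn the small residual 0.1 x.
small = residual(linear([[0.1 if i == j else 0.0 for j in range(3)] for i in range(3)]))
print(small(x))
```

The design point is that "do nothing" is the default behavior of a residual block, so extra blocks cannot easily make the network worse than a shallower one.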

Anatomy of a Residual Block

A residual block typically consists of:

- Two or more convolutional layers

- Batch normalization layers to stabilize learning

- An activation function, usually ReLU (Rectified Linear Unit)

- A skip connection that adds the input to the output

The output of a residual block can be expressed as:

y = F(x, {W_i}) + x

Here, F(x, {W_i}) represents the output of the stacked convolutional layers, parameterized by weights W_i. Adding x ensures that the input information is preserved.
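The components listed above can be sketched in plain Python. This is a toy, assuming a single-channel 1-D signal instead of multi-channel 2-D feature maps, a kernel size of 3, and batch normalization in inference mode with placeholder statistics (mean 0, variance 1); all helper names are hypothetical. It follows the ordering of the basic residual block: convolution, batch norm, ReLU, convolution, batch norm, add the shortcut, then a final ReLU.

```python
from typing import List

Signal = List[float]


def conv1d_same(x: Signal, kernel: Signal) -> Signal:
    """Single-channel 1-D convolution (cross-correlation, as in deep-learning
    libraries) with zero padding so the output has the input's length.
    Assumes an odd kernel length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + x + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(x))]


def batch_norm(x: Signal, mean: float, var: float, gamma: float = 1.0,
               beta: float = 0.0, eps: float = 1e-5) -> Signal:
    """Inference-mode batch normalization with fixed running statistics."""
    scale = gamma / (var + eps) ** 0.5
    return [(v - mean) * scale + beta for v in x]


def relu(x: Signal) -> Signal:
    return [max(0.0, v) for v in x]


def basic_residual_block(x: Signal, k1: Signal, k2: Signal) -> Signal:
    """y = ReLU(F(x) + x), where F = conv -> BN -> ReLU -> conv -> BN."""
    out = relu(batch_norm(conv1d_same(x, k1), mean=0.0, var=1.0))
    out = batch_norm(conv1d_same(out, k2), mean=0.0, var=1.0)
    return relu([f + s for f, s in zip(out, x)])  # skip connection, then final ReLU


# With an all-zero second kernel, F(x) = 0 and the block reduces to ReLU(x).
print(basic_residual_block([0.5, -1.0, 2.0, 0.0], [0.1, 0.2, 0.1], [0.0, 0.0, 0.0]))
```

Because the convolutions use "same" padding, F(x) has the same shape as x, which is what makes the element-wise addition possible.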

Why Skip Connections Are So Important

Skip connections are the heart of ResNet architecture. But why are they so important? The answer lies in their ability to solve the vanishing gradient and degradation problems:

- Preserving Information: Skip connections allow the network to pass information directly, ensuring that essential features are not lost.

- Improved Gradient Flow: Gradients can flow through the shortcut connections without being diminished, making it easier to train deep networks.

- Reduced Complexity: The network can optimize faster and more effectively by focusing on learning residuals instead of complete mappings.

These benefits have made skip connections a standard feature in many modern neural network architectures, not just ResNet.
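A toy comparison can illustrate the improved gradient flow. This sketch assumes a single-unit layer g(h) = 0.5·tanh(h) (a hypothetical choice), stacked either plainly (h ← g(h)) or with a skip connection (h ← h + g(h)). The chain rule gives a per-layer factor of g′(h) in the plain case and 1 + g′(h) in the residual case; since g′(h) lies in [0, 0.5] here, the plain gradient is bounded by 0.5^depth, while the residual gradient never drops below 1.

```python
import math


def branch(h: float) -> float:
    """Toy layer g(h) = 0.5 * tanh(h), shared by both stacks (an assumption)."""
    return 0.5 * math.tanh(h)


def branch_grad(h: float) -> float:
    return 0.5 * (1.0 - math.tanh(h) ** 2)  # always between 0 and 0.5


def input_gradient(depth: int, x: float, use_skip: bool) -> float:
    """d(output)/d(input) through `depth` layers, accumulated with the chain rule."""
    h, grad = x, 1.0
    for _ in range(depth):
        if use_skip:
            grad *= 1.0 + branch_grad(h)  # d/dh [h + g(h)] = 1 + g'(h)
            h = h + branch(h)
        else:
            grad *= branch_grad(h)        # d/dh [g(h)] = g'(h)
            h = branch(h)
    return grad


for n_layers in (10, 50):
    print(n_layers,
          input_gradient(n_layers, 0.5, use_skip=False),
          input_gradient(n_layers, 0.5, use_skip=True))
```

The constant 1 contributed by the identity shortcut is what keeps the signal from collapsing. In real networks the per-layer derivatives are Jacobian matrices rather than scalars, so this is an intuition, not a proof.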

Variants of ResNet Architecture

ResNet architecture comes in several variants, each designed for different applications and levels of complexity. Some of the most popular ones include:

- ResNet-18: A lightweight model with 18 layers, suitable for smaller datasets and tasks.

- ResNet-34: A slightly deeper version with 34 layers, offering improved performance.

- ResNet-50: A widely used variant with 50 layers, incorporating bottleneck layers for efficiency.

- ResNet-101: A deeper model with 101 layers designed for more complex tasks.

- ResNet-152: The deepest of these standard variants, with 152 layers, which achieved state-of-the-art results on large-scale datasets such as ImageNet when it was introduced.
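The layer counts in these names follow from simple arithmetic. The sketch below uses the per-stage block counts listed in the original ResNet paper and counts only weighted layers: one initial 7x7 convolution, the convolutions inside every block (two per basic block in ResNet-18/34, three per bottleneck block in ResNet-50 and deeper), and one final fully connected layer. The names `STAGES` and `resnet_depth` are illustrative.

```python
from typing import Dict, List, Tuple

# Variant -> (blocks in each of the four stages, conv layers per block)
STAGES: Dict[str, Tuple[List[int], int]] = {
    "ResNet-18": ([2, 2, 2, 2], 2),    # basic blocks: two 3x3 convs
    "ResNet-34": ([3, 4, 6, 3], 2),
    "ResNet-50": ([3, 4, 6, 3], 3),    # bottleneck blocks: 1x1, 3x3, 1x1 convs
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}


def resnet_depth(blocks_per_stage: List[int], layers_per_block: int) -> int:
    # +1 for the initial 7x7 convolution, +1 for the final fully connected layer
    return 1 + layers_per_block * sum(blocks_per_stage) + 1


for name, (blocks, layers) in STAGES.items():
    print(name, resnet_depth(blocks, layers))
```

ResNet-34 and ResNet-50 share the same stage layout; the jump in depth comes entirely from swapping two-layer basic blocks for three-layer bottleneck blocks.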

Here’s a comparison of these variants in terms of their architecture and performance:

| Variant | Number of Layers | Parameters (Millions) | Top-5 Accuracy (%) |
|---|---|---|---|
| ResNet-18 | 18 | 11.7 | 89.5 |
| ResNet-34 | 34 | 21.8 | 91.0 |
| ResNet-50 | 50 | 25.6 | 92.2 |
| ResNet-101 | 101 | 44.5 | 93.3 |
| ResNet-152 | 152 | 60.2 | 93.8 |

These variants demonstrate the scalability of ResNet architecture, making it suitable for a wide range of applications.
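The bottleneck layers used from ResNet-50 onward can be quantified by counting convolution weights (biases and batch-norm parameters are ignored here for simplicity). A bottleneck block on 256-channel features reduces to 64 channels with a 1x1 convolution, applies a 3x3 convolution at that reduced width, and expands back with another 1x1. The helper names below are hypothetical.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Number of weights in a k x k convolution without bias."""
    return k * k * c_in * c_out


def basic_block_weights(c: int) -> int:
    # Two 3x3 convolutions, c -> c -> c
    return 2 * conv_weights(3, c, c)


def bottleneck_block_weights(c_out: int, c_mid: int) -> int:
    # 1x1 reduce, 3x3 at the reduced width, 1x1 expand
    return (conv_weights(1, c_out, c_mid)
            + conv_weights(3, c_mid, c_mid)
            + conv_weights(1, c_mid, c_out))


print(basic_block_weights(64))            # 73728
print(bottleneck_block_weights(256, 64))  # 69632
print(basic_block_weights(256))           # 1179648
```

A bottleneck block on 256 channels uses slightly fewer weights than a basic block on only 64 channels, and roughly 17 times fewer than a basic block at 256 channels, which is why the deeper variants stay affordable.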

Applications of ResNet Architecture

ResNet architecture has been applied across various domains, changing how deep learning models are designed and used. Some key applications include:

- Image Classification: ResNet models have achieved state-of-the-art results on benchmark datasets like ImageNet.

- Object Detection: ResNet is often used as a backbone for object detection frameworks like Faster R-CNN.

- Medical Imaging: ResNet-based models are used to detect diseases and anomalies in medical scans.

- Natural Language Processing (NLP): Although ResNet was designed primarily for vision tasks, ResNet-inspired techniques such as residual connections are also used in NLP models.

- Generative Adversarial Networks (GANs): Skip connections from ResNet are employed in many GAN architectures to improve training stability.

The versatility of ResNet architecture highlights its importance in the field of artificial intelligence.

Advantages of ResNet Architecture

The success of ResNet architecture can be attributed to its numerous advantages:

- Better Accuracy: ResNet consistently outperforms plain networks (without skip connections) of the same depth.

- Easier Training: Skip connections make it practical to train much deeper networks without significant optimization difficulties.

- Flexibility: ResNet can be adapted for various tasks and domains.

- Reusability: Pre-trained ResNet models are available, making it easier to fine-tune them for specific applications.

Limitations of ResNet Architecture

While ResNet architecture is highly effective, it is not without limitations:

- Computational Cost: Deep ResNet models require significant computational resources.

- Overfitting: Without proper regularization, ResNet models can overfit smaller datasets.

- Complexity: The architecture can be challenging for beginners to implement and optimize.

Despite these limitations, the benefits of ResNet architecture far outweigh its drawbacks.

Future of ResNet Architecture

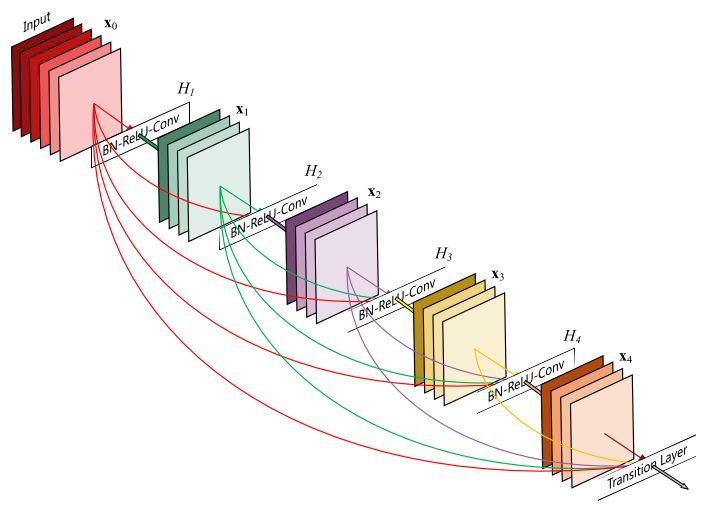

ResNet architecture has laid the foundation for many subsequent innovations in deep learning. Researchers have built upon its principles to develop even more advanced architectures, such as DenseNet, EfficientNet, and Transformer-based models, and the core idea of residual learning continues to inspire breakthroughs.

As AI evolves, ResNet architecture will remain a cornerstone of deep learning research, driving progress in computer vision, robotics, and beyond.

Conclusion

ResNet architecture is a shining example of how simple ideas can lead to transformative advancements. By introducing residual learning and skip connections, ResNet solved critical challenges in training deep networks and unlocked new possibilities in artificial intelligence. Its impact can be seen across countless applications, from image classification to medical diagnostics.

As we look to the future, one thing is clear: ResNet architecture will continue to inspire innovation and push the boundaries of what deep learning can achieve. So, whether you’re a researcher, a developer, or just someone curious about AI, ResNet is a name worth remembering.

ResNet architecture represents more than a technical innovation: it’s a testament to the power of creative problem-solving in advancing human knowledge.